Runtime Systems and Tools:

Dynamic Load Balancing

Our research in load balancing focuses on two primary areas: Object Migration and Seed Balancing.

Object Migration

A major reason slowing deployment of parallel programs is that efficient parallel programs are difficult to write. Parallel programming adds a second dimension to programming: not just when will a particular operation be executed, but where, i.e. what processor will perform it. A vast number of parallelizable applications do not have a regular structure for efficient parallelization. Such applications require load balancing to perform efficiently in parallel. The load in these applications may also change over time, requiring rebalancing. The programmer is left with the choice of either distributing computation haphazardly, producing poorly-performing programs, or spending more development time including load-balancing code in the application.

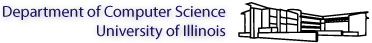

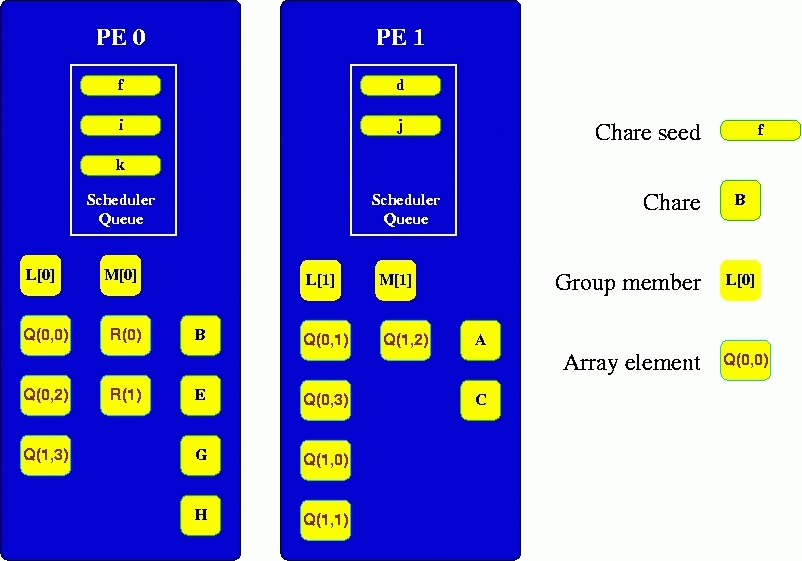

A typical object distribution on two processors in a Charm++ program.

A typical object distribution on two processors in a Charm++ program.

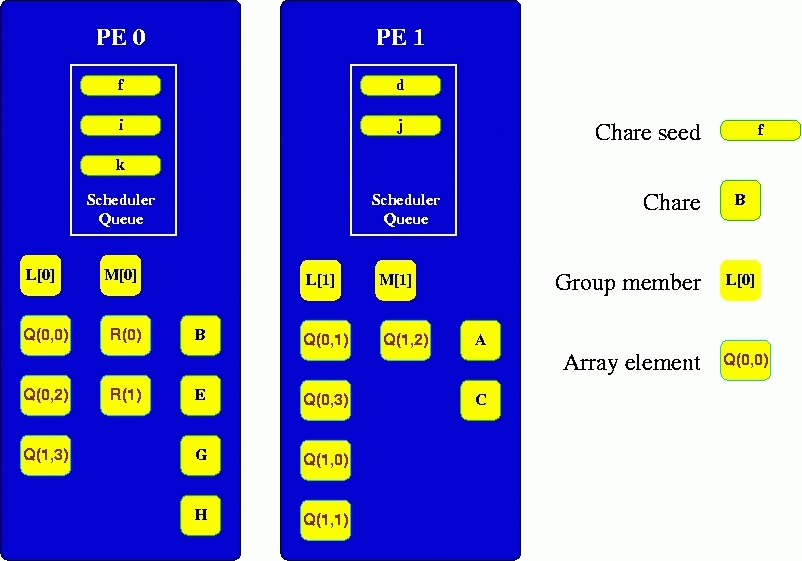

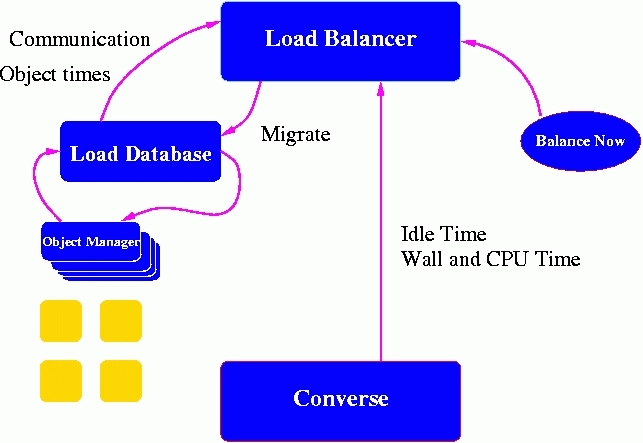

The components and interactions in the load balancing framework.

The components and interactions in the load balancing framework.

In recent years, new types of "parallel" computers have appeared. Networks of commodity workstations are making parallel computation available to an expanding group of researchers. Workstation networks present new issues for the application programmer. Now, in addition to application imbalance, a parallel program must be concerned with background load from other simultaneous users. Parallel programs may run on clusters of workstations on an interactive user's desks, where the primary user only permits parallel computation when the computer is not being used interactively. Finally, computational clusters may expand over time, but with the rapid increase in computational power, new processors are likely to be faster than the older machines that they are supplementing. To maximize throughput, load balancers in parallel applications must account for all these factors.

Work migration is a unified scheme for handling both application-specific and externally-arising load imbalance. The difficulty with migrating work is that either work is repartitioned in an application-specific way, placing the burden on the application programmer, or that automatic migration is supported, but with poor accuracy, due to the lack of application-specific knowledge.

Object migration provides a way of performing accurate, fine-grained automatic load balancing. Objects usually have small, well-defined regions of memory on which they operate, reducing the cost of migration. Using the Charm++ object model, the run-time system measures the work represented by particular objects, rather than deriving execution time from application-specific heuristics. Furthermore, the run-time system records object-to-object communication patterns, so the load balancer can asses the communication impact of migrating particular objects.

With the advent of massively parallel machines like Bluegene/Q and Cray XC40, our recent work has focused on topology-aware migration of objects. We have developed strategies which take into account both the message sizes and the network hop length to minimize the total amount of communication.

Communication latencies form a significant factor in the performance of parallel applications on these large machines. The latencies are primarily due to network contention in the grid and torus networks, which are usually used in these large parallel machines. Our load balancing strategies minimize the impact of topology by heuristically minimizing the number of hops traveled by each communicated byte. They are not network specific and work for all classes of interconnection networks.

Object Migration

- Periodic Load Balancing for bipartite object networks

- Adaptive use of Workstation Clusters

- Optimal Object Migration to handle background load variation

A major reason slowing deployment of parallel programs is that efficient parallel programs are difficult to write. Parallel programming adds a second dimension to programming: not just when will a particular operation be executed, but where, i.e. what processor will perform it. A vast number of parallelizable applications do not have a regular structure for efficient parallelization. Such applications require load balancing to perform efficiently in parallel. The load in these applications may also change over time, requiring rebalancing. The programmer is left with the choice of either distributing computation haphazardly, producing poorly-performing programs, or spending more development time including load-balancing code in the application.

A typical object distribution on two processors in a Charm++ program.

A typical object distribution on two processors in a Charm++ program.

The components and interactions in the load balancing framework.

The components and interactions in the load balancing framework.

In recent years, new types of "parallel" computers have appeared. Networks of commodity workstations are making parallel computation available to an expanding group of researchers. Workstation networks present new issues for the application programmer. Now, in addition to application imbalance, a parallel program must be concerned with background load from other simultaneous users. Parallel programs may run on clusters of workstations on an interactive user's desks, where the primary user only permits parallel computation when the computer is not being used interactively. Finally, computational clusters may expand over time, but with the rapid increase in computational power, new processors are likely to be faster than the older machines that they are supplementing. To maximize throughput, load balancers in parallel applications must account for all these factors.

Work migration is a unified scheme for handling both application-specific and externally-arising load imbalance. The difficulty with migrating work is that either work is repartitioned in an application-specific way, placing the burden on the application programmer, or that automatic migration is supported, but with poor accuracy, due to the lack of application-specific knowledge.

Object migration provides a way of performing accurate, fine-grained automatic load balancing. Objects usually have small, well-defined regions of memory on which they operate, reducing the cost of migration. Using the Charm++ object model, the run-time system measures the work represented by particular objects, rather than deriving execution time from application-specific heuristics. Furthermore, the run-time system records object-to-object communication patterns, so the load balancer can asses the communication impact of migrating particular objects.

With the advent of massively parallel machines like Bluegene/Q and Cray XC40, our recent work has focused on topology-aware migration of objects. We have developed strategies which take into account both the message sizes and the network hop length to minimize the total amount of communication.

Communication latencies form a significant factor in the performance of parallel applications on these large machines. The latencies are primarily due to network contention in the grid and torus networks, which are usually used in these large parallel machines. Our load balancing strategies minimize the impact of topology by heuristically minimizing the number of hops traveled by each communicated byte. They are not network specific and work for all classes of interconnection networks.

Seed Load Balancing

Seed load balancing involves the movement of object creation messages, or "seeds", to create a balance of work across a set of processors. Several variations of strategies are being analyzed. In particular, we distinguish between global strategies, which may result in communication amongst all processors to exchange load information, and neighborhood strategies, which typically impose a dense graph organization on the processors, and restrict communication to neighbors only. Some strategies use averaging of loads to determine how seeds should be distributed, while others use receiver-initiated strategies, where a processor requests work from elsewhere when it is about to go idle. A strategy that places seeds randomly when they are created and does no movement of seeds thereafter is used as a baseline for comparison on numerous benchmarks.People

Papers/Talks

23-02

2022

[MS Thesis]

[MS Thesis]

Extending Paratreet, A Framework For Spatial Tree Base Algorithms, With GPU Kernels and Load Balancers [Thesis 2022]

23-01

2023

[PhD Thesis]

[PhD Thesis]

Vector Load Balancing for High-Performance Parallel Applications [Thesis 2023]

18-03

2018

[Paper]

[Paper]

Adaptive Methods for Irregular Parallel Discrete Event Simulation Workloads [ACM SIGSIM PADS 2018]

18-02

2018

[Paper]

[Paper]

Multi-level Load Balancing with an Integrated Runtime Approach [CCGrid 2018]

17-08

2017

[Paper]

[Paper]

Integrating OpenMP into the Charm++ Programming Model [ESPM2 2017]

17-01

2017

[Poster]

[Poster]

Poster: Automated Load Balancer Selection Based on Application Characteristics [PPoPP 2017]

16-19

2016

[Paper]

[Paper]

Handling Transient and Persistent Imbalance Together in Distributed and Shared Memory [PPL Technical Report 2016]

14-49

2014

[Talk]

[Talk]

Adaptive runtime systems for computational chemistry [ACS 2014]

14-41

2014

[Paper]

[Paper]

Applying Graph Partitioning Methods in Measurement-based Dynamic Load Balancing [CS Res. & Tech. Report 2014]

14-24

2014

[Paper]

[Paper]

Optimizing Data Locality for Fork/Join Programs Using Constrained Work Stealing [SC 2014]

14-19

2014

[Paper]

[Paper]

Position Paper: Power-aware and Temperature Restrain Modeling for Maximizing Performance and Reliability [MODSIM 2014]

13-47

2013

[Talk]

[Talk]

A Distributed Dynamic Load Balancer for Iterative Applications [SC 2013]

13-33

2013

[Paper]

[Paper]

Thermal Aware Automated Load Balancing for HPC Applications [Cluster 2013]

13-26

2013

[Paper]

[Paper]

A Distributed Dynamic Load Balancer for Iterative Applications [SC 2013]

13-17

2013

[PhD Thesis]

[PhD Thesis]

Scalable Message-Logging Techniques for Effective Fault Tolerance in HPC Applications [Thesis 2013]

13-16

2013

[Paper]

[Paper]

Parallel Science and Engineering Applications: The Charm++ Approach [Book 2013]

13-05

2013

[Paper]

[Paper]

Improving HPC Application Performance in Cloud through Dynamic Load Balancing [CCGrid 2013]

12-53

2012

[Talk]

[Talk]

Automated Load Balancing Invocation based on Application Characteristics [Cluster 2012]

12-41

2012

[MS Thesis]

[MS Thesis]

Meta-Balancer: Automated Load Balancing Based On Application Behavior [Thesis 2012]

12-31

2012

[Paper]

[Paper]

A Hierarchical Approach for Load Balancing on Parallel Multi-core Systems [ICPP 2012]

12-29

2012

[Paper]

[Paper]

Automated Load Balancing Invocation based on Application Characteristics [Cluster 2012]

12-20

2012

[Paper]

[Paper]

‘Cool’ Load Balancing for High Performance Computing Data Centers [IEEE TC 2012]

12-11

2012

[Paper]

[Paper]

Work Stealing and Persistence-based Load Balancers for Iterative Overdecomposed Applications [HPDC 2012]

11-38

2011

[Talk]

[Talk]

Dynamic Load Balance for Optimized Message Logging in Fault Tolerant HPC Applications [Cluster 2011]

11-28

2011

[Paper]

[Paper]

Improving Parallel System Performance with a NUMA-aware Load Balancer [CS Res. & Tech. Report 2011]

11-26

2011

[Paper]

[Paper]

Dynamic Load Balance for Optimized Message Logging in Fault Tolerant HPC Applications [Cluster 2011]

11-25

2011

[Paper]

[Paper]

ParSSSE: An Adaptive Parallel State Space Search Engine [PPL 2011]

11-17

2011

[Paper]

[Paper]

Enabling and Scaling Biomolecular Simulations of 100~Million Atoms on Petascale Machines with a Multicore-Optimized Message-Driven Runtime [SC 2011]

11-07

2011

[Paper]

[Paper]

Distributed Memory Load Balancing [Encyclopedia of Parallel Computing 2011]

10-26

2011

[Paper]

[Paper]

A Comparative Analysis of Load Balancing Algorithms Applied to a Weather Forecast Model [SBAC-PAD 2011]

10-20

2010

[Paper]

[Paper]

Periodic Hierarchical Load Balancing for Large Supercomputers [IJHPCA 2010]

10-17

2010

[PhD Thesis]

[PhD Thesis]

Automating Topology Aware Mapping for Supercomputers [Thesis 2010]

10-08

2010

[Paper]

[Paper]

Hierarchical Load Balancing for Charm++ Applications on Large Supercomputers [P2S2 2010]

09-02

2009

[Paper]

[Paper]

Dynamic Topology Aware Load Balancing Algorithms for Molecular Dynamics Applications [ICS 2009]

08-03

2008

[Paper]

[Paper]

Massively Parallel Cosmological Simulations with ChaNGa [IPDPS 2008]

08-01

2008

[Paper]

[Paper]

Overcoming Scaling Challenges in Biomolecular Simulations across Multiple Platforms [IPDPS 2008]

07-12

2007

[MS Thesis]

[MS Thesis]

Application-specific Topology-aware Mapping and Load Balancing for three-dimensional Torus Topologies [Thesis 2007]

07-01

2007

[Paper]

[Paper]

Optimizing Distributed Application Performance Using Dynamic Grid Topology-Aware Load Balancing [IPDPS 2007]

06-05

2006

[Paper]

[Paper]

Multiple Flows of Control in Migratable Parallel Programs [HPSEC 2006]

05-26

2005

[Poster]

[Poster]

Speeding Up Parallel Simulation with Automatic Load Balancing [PPL Poster 2005]

05-18

2006

[Paper]

[Paper]

Topology-Aware Task Mapping for Reducing Communication Contention on Large Parallel Machines [IPDPS 2006]

05-07

2005

[MS Thesis]

[MS Thesis]

Strategies for topology-aware task mapping and for rebalancing with bounded migrations [Thesis 2005]

05-06

2005

[PhD Thesis]

[PhD Thesis]

Achieving High Performance on Extremely Large Parallel Machines: Performance Prediction and Load Balancing [Thesis 2005]

99-06

1999

[Paper]

[Paper]

Branch and Bound Based Load Balancing for Parallel Applications [LNCS 1999]

99-03

2000

[Paper]

[Paper]

Handling Application-Induced Load Imbalance using Parallel Objects [Parallel and Distributed Computing for Symbolic and Irregular Applications 2000]

99-02

1999

[Paper]

[Paper]

Adapting to Load on Workstation Clusters [ Frontiers of Massively Parallel Computation 1999]

98-02

1998

[Paper]

[Paper]

Load Balancing in Parallel Molecular Dynamics [International Symposium on Solving Irregularly Structured Problems in Parallel 1998]

96-07

1996

[Paper]

[Paper]

Automating Runtime Optimizations for Load Balancing in Irregular Problems [CPDPTA 1996]

93-13

1993

[Paper]

[Paper]

A Load Balancing Strategy For Prioritized Execution of Tasks [International Symposium on Parallel Processing 1993]

92-05

1992

[Paper]

[Paper]

A Load Balancing Strategy For Prioritized Execution of Tasks [Workshop on Dynamic Object Placement and Load Balancing at ECOOP 1992]

90-11

1990

[Paper]

[Paper]

Distributed and Adaptive Dynamic Load Balancing Scheme for Parallel Processing of Medium-Grain Tasks [DMCC 1990]

89-08

1989

[Paper]

[Paper]

A Dynamic Scheduling Strategy for the Chare Kernel System [SC 1989]